Samsung SDS Brightics, an AI Accelerator for Automating and Accelerating Deep Learning Training

Training AI models is extremely time-consuming. Moving model training onto large, distributed clusters can shorten it, but the development and migration work this requires is itself slow, so without a feasible alternative training projects remain long. To address this, Samsung SDS developed the Brightics AI Accelerator. The Kubernetes-based, containerized application is now available on the NVIDIA NGC catalog, a GPU-optimized hub for AI and HPC containers, pre-trained models, industry SDKs, and Helm charts that helps simplify and accelerate AI development and deployment.

The Samsung SDS Brightics AI Accelerator application automates machine learning, speeds up model training and improves model accuracy with key features such as automated feature engineering, model selection, and hyper-parameter tuning without requiring infrastructure development and deployment expertise. Brightics AI Accelerator can be used in many industries such as healthcare, manufacturing, retail, automotive and across different use cases spanning computer vision, natural language processing and more.

Key Features and Benefits:

- Is use-case agnostic and covers AI model training by applying AutoML to tabular, CSV, time-series, image, or natural language data, enabling analytics; image classification, detection, and segmentation; and NLP use cases.

- Offers model portability between cloud and on-prem data centers and provides a unified interface for orchestrating large, distributed clusters to train deep learning models using the TensorFlow, Keras, and PyTorch frameworks, as well as AutoML using scikit-learn.

- AutoML software automates and accelerates model training on tabular data through automated model selection from scikit-learn, automated feature synthesis, and hyper-parameter search optimization.

- Automated Deep Learning (AutoDL) software automates and accelerates deep learning model training using data-parallel, synchronous distributed training based on Horovod ring-allreduce with the Keras, TensorFlow, and PyTorch frameworks, with minimal code. AutoDL exploits up to 512 NVIDIA GPUs per training job to produce a model in 1 hour versus 3 weeks using traditional methods.
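To make the ring-allreduce idea behind data-parallel synchronous training more concrete, here is a minimal single-process simulation in plain Python. It is only a sketch of the general algorithm, not Horovod's or Brightics' implementation; the function name `ring_allreduce` and the list-of-lists representation of per-worker gradients are assumptions made for illustration.

```python
def ring_allreduce(worker_grads):
    """Simulate a sum all-reduce over n workers arranged in a ring.

    worker_grads: list of n equal-length lists (one gradient vector per
    worker). The vector length must be divisible by n so it can be split
    into n equal chunks. Returns the per-worker vectors after the reduce.
    """
    n = len(worker_grads)
    size = len(worker_grads[0])
    if any(len(g) != size for g in worker_grads) or size % n != 0:
        raise ValueError("need equal-length vectors divisible by worker count")
    chunk = size // n
    data = [list(g) for g in worker_grads]  # do not mutate the inputs

    def span(c):
        return range(c * chunk, (c + 1) * chunk)

    def exchange(chunk_for_worker, combine):
        # Snapshot every outgoing message first so all sends in a step
        # happen "simultaneously", as they would on real workers.
        msgs = []
        for i in range(n):
            c = chunk_for_worker(i)
            msgs.append((c, [data[i][j] for j in span(c)]))
        for i, (c, payload) in enumerate(msgs):
            dst = (i + 1) % n  # each worker sends to its right neighbour
            for j, v in zip(span(c), payload):
                data[dst][j] = combine(data[dst][j], v)

    # Phase 1 (scatter-reduce): after n-1 steps, worker i holds the
    # complete sum for chunk (i + 1) % n.
    for step in range(n - 1):
        exchange(lambda i: (i - step) % n, lambda mine, recv: mine + recv)

    # Phase 2 (all-gather): circulate the completed chunks around the ring.
    for step in range(n - 1):
        exchange(lambda i: (i + 1 - step) % n, lambda mine, recv: recv)

    return data


# Two hypothetical workers, each holding a 4-element gradient.
summed = ring_allreduce([[1, 2, 3, 4], [10, 20, 30, 40]])
```

Each worker only ever talks to its neighbour and sends one chunk per step, which is why the per-worker traffic stays roughly constant as workers are added. Dividing the summed values by the number of workers would give the averaged gradient that synchronous data-parallel training typically applies.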

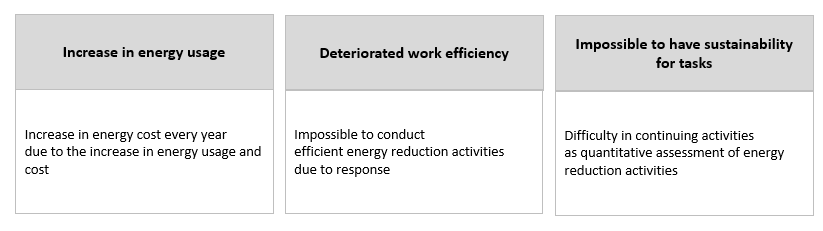

This electronics manufacturer pays USD 135 million per year in energy expenses. Because its energy costs kept rising, improving energy efficiency was essential to staying competitive in manufacturing.

The partner considered it essential to analyze energy consumption and energy efficiency by process and facility.

However, the partner produces a wide variety of products and operates many kinds of facilities across a large workplace.

This made it impossible to address the challenge through limited on-site work by the relevant staff alone.

The partner therefore needed a system that analyzes energy consumption and energy efficiency in real time.

The partner also wanted to sustain its energy reduction efforts by analyzing the causes of efficiency changes and establishing an immediate response system.

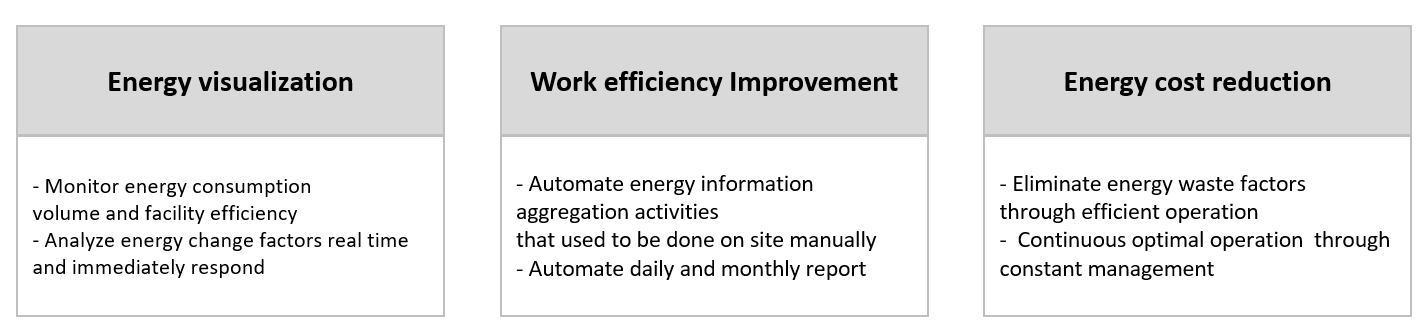

Energy-related data has been integrated, and an integrated energy management system has been implemented for the entire company.

[Energy monitoring]

ㆍ Automatically gather energy usage and operation data, and improve data integrity

ㆍ Visualize real-time energy usage by process and facility

ㆍ Visualize energy intensity by business division, factory and product
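As a rough illustration of the last item, energy intensity is commonly computed as energy consumed per unit of output. The sketch below aggregates meter records by a chosen field. The record fields (`division`, `factory`, `product`, `kwh`, `units`) and all sample values are hypothetical and do not come from the case study.

```python
from collections import defaultdict


def energy_intensity(records, key):
    """Return kWh per produced unit, aggregated by the given field name."""
    kwh = defaultdict(float)
    units = defaultdict(float)
    for r in records:
        kwh[r[key]] += r["kwh"]
        units[r[key]] += r["units"]
    # Skip groups with no recorded output to avoid dividing by zero.
    return {k: kwh[k] / units[k] for k in kwh if units[k] > 0}


# Made-up sample records, for illustration only.
records = [
    {"division": "A", "factory": "F1", "product": "P1", "kwh": 1200.0, "units": 400},
    {"division": "A", "factory": "F2", "product": "P1", "kwh": 900.0, "units": 300},
    {"division": "B", "factory": "F3", "product": "P2", "kwh": 500.0, "units": 100},
]
by_division = energy_intensity(records, "division")
by_factory = energy_intensity(records, "factory")
```

The same function can be called with `"product"` as the key, which matches the three breakdowns named in the list.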

[Analyze energy efficiency & cause of fluctuation]

ㆍ Develop models to identify facilities that consume excessive energy

ㆍ Derive efficiency variables and their optimal values

[Establish management systems for energy estimation]

ㆍ Predict energy consumption based on energy usage data analytics

ㆍ Establish energy reduction plans and activities based on forecast values
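The case study does not say which forecasting model is used. Purely as an illustration of predicting consumption from usage data, the sketch below fits an ordinary least-squares line relating energy use to production volume and uses it to forecast a planned month. The technique, the function names, and the numbers are all assumptions chosen for simplicity.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def forecast(slope, intercept, planned_volume):
    """Predicted energy use for a planned production volume."""
    return slope * planned_volume + intercept


# Made-up history: production volume (thousand units) vs. energy (MWh).
volume = [1.0, 2.0, 3.0, 4.0]
energy = [5.0, 7.0, 9.0, 11.0]
slope, intercept = fit_line(volume, energy)
next_month = forecast(slope, intercept, 5.0)
```

Comparing such a forecast with a target budget is one simple way to decide where reduction activities are needed. A production system would likely use richer features and a more capable model.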

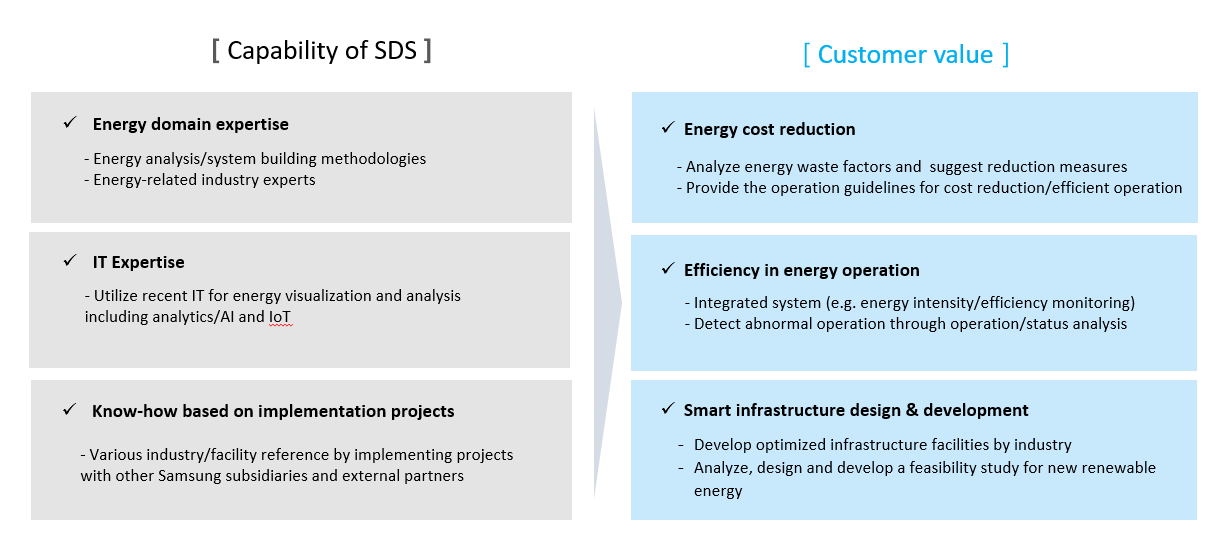

SDS Energy Service

Samsung SDS provides services ranging from energy consulting to end-to-end infrastructure and system development, tailored to the business type.

■ Energy consulting: energy diagnosis and analysis of consumption patterns, establishment of optimization measures

■ Measurement infrastructure setup: establishing infrastructure, including IoT-based measurement infrastructure, to manage energy effectively

■ Integrated data management: data gathering, cleansing and storage from various IoT equipment and systems

■ Data analysis: data modeling and predictive simulation for AI-based efficiency analysis

■ Integrated management system implementation: development of control systems for energy plan/performance management, energy efficiency analysis and optimal operation

Partners have laid the groundwork for energy reduction by visualizing energy use at their workplaces, which lets them operate their facilities and systems more rationally.

Reducing energy usage has been a major focus for us over the past 10 years: we have built our own super-efficient servers at Google, invented more efficient ways to cool our data centres and invested heavily in green energy sources, with the goal of being powered 100 percent by renewable energy. Compared to five years ago, we now get around 3.5 times the computing power out of the same amount of energy, and we continue to make many improvements each year.

Major breakthroughs, however, are few and far between - which is why we are excited to share that by applying DeepMind’s machine learning to our own Google data centres, we’ve managed to reduce the amount of energy we use for cooling by up to 40 percent. In any large scale energy-consuming environment, this would be a huge improvement. Given how sophisticated Google’s data centres are already, it’s a phenomenal step forward.

The implications are significant for Google’s data centres, given its potential to greatly improve energy efficiency and reduce emissions overall. This will also help other companies who run on Google’s cloud to improve their own energy efficiency. While Google is only one of many data centre operators in the world, many are not powered by renewable energy as we are. Every improvement in data centre efficiency reduces total emissions into our environment and with technology like DeepMind’s, we can use machine learning to consume less energy and help address one of the biggest challenges of all - climate change.

One of the primary sources of energy use in the data centre environment is cooling. Just as your laptop generates a lot of heat, our data centres - which contain servers powering Google Search, Gmail, YouTube, etc. - also generate a lot of heat that must be removed to keep the servers running. This cooling is typically accomplished via large industrial equipment such as pumps, chillers and cooling towers. However, dynamic environments like data centres make it difficult to operate optimally for several reasons:

- The equipment, how we operate that equipment, and the environment interact with each other in complex, nonlinear ways. Traditional formula-based engineering and human intuition often do not capture these interactions.

- The system cannot adapt quickly to internal or external changes (like the weather). This is because we cannot come up with rules and heuristics for every operating scenario.

- Each data centre has a unique architecture and environment. A custom-tuned model for one system may not be applicable to another. Therefore, a general intelligence framework is needed to understand the data centre’s interactions.

To address this problem, we began applying machine learning two years ago to operate our data centres more efficiently. And over the past few months, DeepMind researchers began working with Google’s data centre team to significantly improve the system’s utility. Using a system of neural networks trained on different operating scenarios and parameters within our data centres, we created a more efficient and adaptive framework to understand data centre dynamics and optimize efficiency.

We accomplished this by taking the historical data that had already been collected by thousands of sensors within the data centre - data such as temperatures, power, pump speeds, setpoints, etc. - and using it to train an ensemble of deep neural networks. Since our objective was to improve data centre energy efficiency, we trained the neural networks on the average future PUE (Power Usage Effectiveness), which is defined as the ratio of the total building energy usage to the IT energy usage. We then trained two additional ensembles of deep neural networks to predict the future temperature and pressure of the data centre over the next hour. The purpose of these predictions is to simulate the recommended actions from the PUE model, to ensure that we do not go beyond any operating constraints.
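The following sketch illustrates the two ideas in this paragraph: the PUE ratio, and using separate temperature and pressure predictions to screen candidate actions before recommending the one with the lowest predicted PUE. It is not the actual system. The toy lambda "models" stand in for trained neural networks, averaging the ensemble members is an assumed way of combining them, and the setpoint candidates and limits are hypothetical.

```python
from statistics import mean


def pue(total_building_energy, it_energy):
    """Power Usage Effectiveness: total building energy / IT energy."""
    if it_energy <= 0:
        raise ValueError("IT energy must be positive")
    return total_building_energy / it_energy


def ensemble_predict(models, action):
    """Average the predictions of all ensemble members (an assumption)."""
    return mean(m(action) for m in models)


def recommend(candidates, pue_models, temp_models, pressure_models,
              temp_max, pressure_max):
    """Pick the candidate with the lowest predicted PUE among those whose
    predicted temperature and pressure stay within the given limits."""
    safe = [
        a for a in candidates
        if ensemble_predict(temp_models, a) <= temp_max
        and ensemble_predict(pressure_models, a) <= pressure_max
    ]
    if not safe:
        return None  # no action satisfies the operating constraints
    return min(safe, key=lambda a: ensemble_predict(pue_models, a))


# Toy stand-ins for trained networks: a higher setpoint lowers predicted
# PUE but raises predicted temperature. All numbers are made up.
pue_models = [lambda s: 1.30 - 0.01 * s, lambda s: 1.32 - 0.01 * s]
temp_models = [lambda s: 20.0 + s, lambda s: 21.0 + s]
pressure_models = [lambda s: 1.0, lambda s: 1.0]

best = recommend([0, 2, 4, 6], pue_models, temp_models, pressure_models,
                 temp_max=25.0, pressure_max=2.0)
```

In this toy setup the setpoint of 6 has the lowest predicted PUE but is rejected because its predicted temperature exceeds the limit, so the recommendation falls back to the best action that stays within constraints.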

We tested our model by deploying it on a live data centre. The graph below shows a typical day of testing, including when we turned the machine learning recommendations on, and when we turned them off.

Our machine learning system was able to consistently achieve a 40 percent reduction in the amount of energy used for cooling, which equates to a 15 percent reduction in overall PUE overhead after accounting for electrical losses and other non-cooling inefficiencies. It also produced the lowest PUE the site had ever seen.
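The relationship between the two percentages can be checked with a little arithmetic. The sketch below assumes that "PUE overhead" means PUE minus 1 (the energy used beyond the IT load, per unit of IT energy) and that only cooling energy changes. Under those assumptions, the quoted figures imply that cooling accounts for a bit under 40 percent of the overhead. The starting PUE of 1.12 is a hypothetical value used only to show the calculation.

```python
def implied_cooling_share(cooling_reduction, overhead_reduction):
    """Fraction of PUE overhead attributable to cooling, assuming cooling
    is the only component that changes."""
    return overhead_reduction / cooling_reduction


def pue_after(pue_before, overhead_reduction):
    """New PUE after reducing the overhead (PUE - 1) by a given fraction."""
    overhead = pue_before - 1.0
    return 1.0 + overhead * (1.0 - overhead_reduction)


# Figures from the text: 40% less cooling energy, 15% less PUE overhead.
share = implied_cooling_share(0.40, 0.15)  # approximately 0.375

# Hypothetical starting PUE, for illustration only.
new_pue = pue_after(1.12, 0.15)            # approximately 1.102
```

This also shows why a large cut in cooling energy translates into a smaller change in PUE itself: the reduction applies only to the overhead portion, and only to the cooling share of that overhead.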

Because the algorithm is a general-purpose framework to understand complex dynamics, we plan to apply this to other challenges in the data centre environment and beyond in the coming months. Possible applications of this technology include improving power plant conversion efficiency (getting more energy from the same unit of input), reducing semiconductor manufacturing energy and water usage, or helping manufacturing facilities increase throughput.